Introducing Playtesting

Building a Lightweight UX Feedback Loop for Hyper-Casual Games

Role

Product designer

Tasks

UX Research

Game Design

A user-centred playtesting process introduced at Playstack to improve design quality, fix friction points early, and reduce post-launch risks.

The Challenge

While working at Playstack in 2022, I noticed that some hyper-casual mobile games were being released without any structured user testing. As a result, issues with gameplay, UI, and onboarding often went unnoticed until launch, when fixing them was costly or came too late to affect performance.

One game in development, Idle Sea Park (developed by Amuzo), highlighted this gap. I initiated and led a project to introduce a simple, scalable playtesting process that could be used during the development stage to improve quality and surface friction points before release.

This was the first time playtesting was formally introduced at Playstack.

Role & Collaboration

As a Product Designer, I designed and implemented the playtesting process from scratch. I collaborated with project managers and the analytics team to align on test goals, and worked closely with the development studio to ensure the feedback loop was practical and actionable.

The Impact

Streamlined the feedback loop between stakeholders and developers

Provided evidence-based justification for design changes

Served as a model for future testing across other titles at Playstack

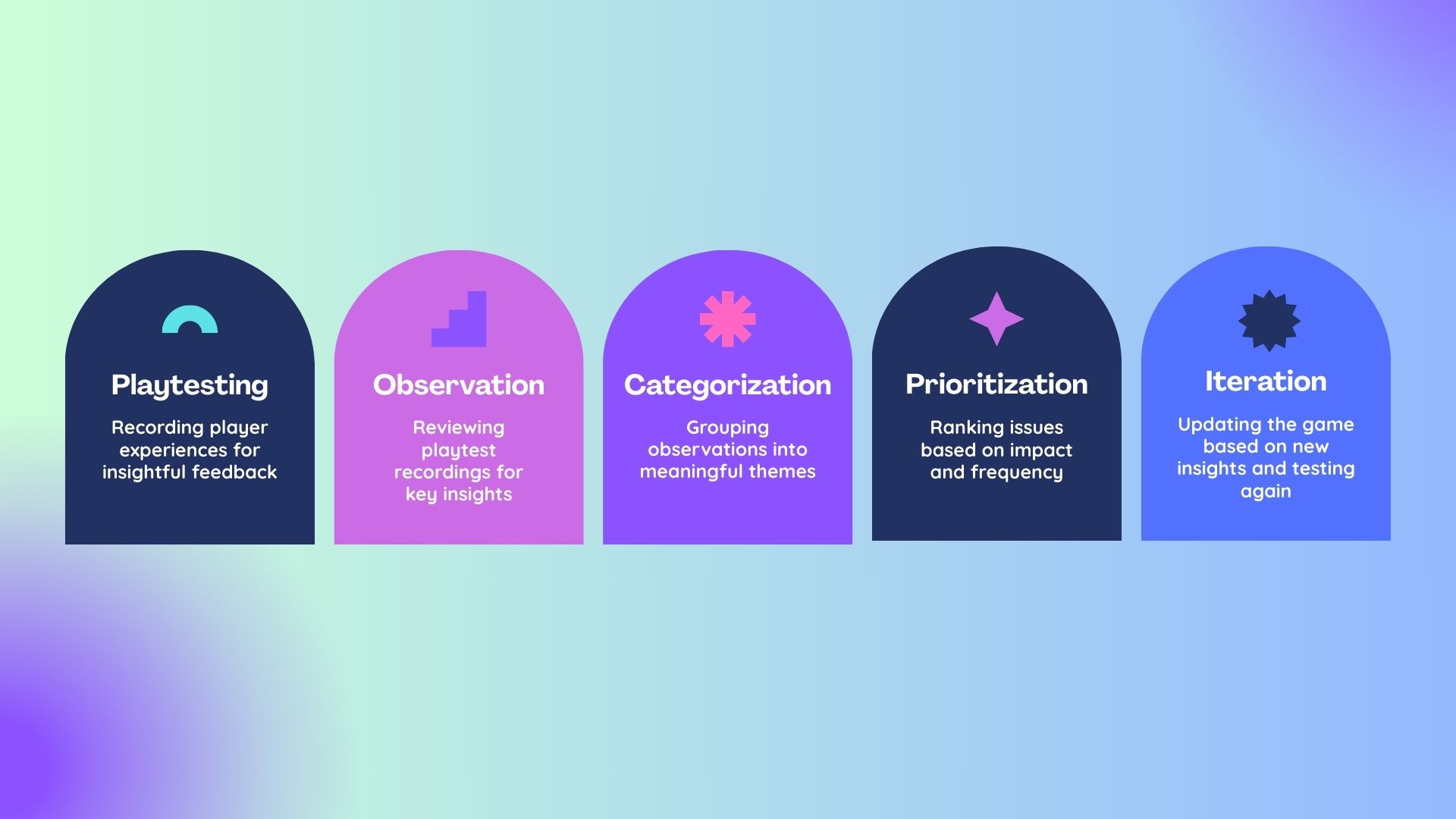

The Process

1. Recruitment & Setup

The goal was to gather real, unbiased reactions from people playing the game for the first time. For the first round, I recruited internal testers across the company — people who weren’t part of the project — to get quick, frictionless feedback. For the second round, I used an external game testing platform to gather input from users more aligned with our target player base.

Participants were asked to play Idle Sea Park for at least 15 minutes while recording both their screen and voice, speaking aloud as they played. This “think aloud” method allowed us to capture not just what users did, but what they thought, questioned, and misunderstood in real time.

2. Playtesting Sessions

We intentionally kept the sessions unmoderated and natural, to avoid influencing player behavior. This revealed:

Points of hesitation or confusion

Unclear UI elements or feedback

Whether the game’s core loop was understood and engaging

Emotional reactions like boredom, delight, or frustration

The screen and voice recordings gave us rich, context-heavy data to work with, without requiring formal lab setups or large budgets.

“Very good but the water isn’t clear so I can’t see the fish nor the decor. And way to many buttons on the screen that I can barely see the actual game.”

“This game is a lot of fun and the fish are so cute and colorful. Getting more money is a bit hard, getting new fish when you are about to finish one of the tanks is a pain and you get such little money from the exhibitions”

Observations made by players after the testing

3. Observation Logging

I watched each video closely and created a detailed log in a spreadsheet, noting:

Actions players took

Comments they made

Moments where they got stuck, confused, or surprised

Every observation was tagged by tester and timestamped, making it easy to scan across multiple players and compare patterns.
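The log itself lived in a spreadsheet. As a rough sketch only, the same structure could be modelled in Python as below. The field names, the `MM:SS` timestamp format, and the sample rows are all hypothetical and are not taken from the real sessions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    tester: str     # hypothetical tester ID, e.g. "T1"
    timestamp: str  # position in the recording, assumed to be "MM:SS"
    kind: str       # "action", "comment", or "stuck"
    note: str       # what was observed


def to_seconds(timestamp: str) -> int:
    """Convert an "MM:SS" timestamp into seconds so rows sort correctly."""
    minutes, seconds = timestamp.split(":")
    return int(minutes) * 60 + int(seconds)


def group_by_tester(log):
    """Group observations per tester, each group ordered by time."""
    grouped = defaultdict(list)
    for obs in log:
        grouped[obs.tester].append(obs)
    for rows in grouped.values():
        rows.sort(key=lambda o: to_seconds(o.timestamp))
    return dict(grouped)


# Invented sample rows for illustration only
log = [
    Observation("T2", "03:10", "stuck", "Could not find the upgrade button"),
    Observation("T1", "01:45", "comment", "Not sure what this icon does"),
    Observation("T1", "00:30", "action", "Skipped the first tutorial prompt"),
]
by_tester = group_by_tester(log)
```

Keeping the tester and timestamp on every row is what makes it possible to jump back to the exact moment in a recording when a pattern needs a second look.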

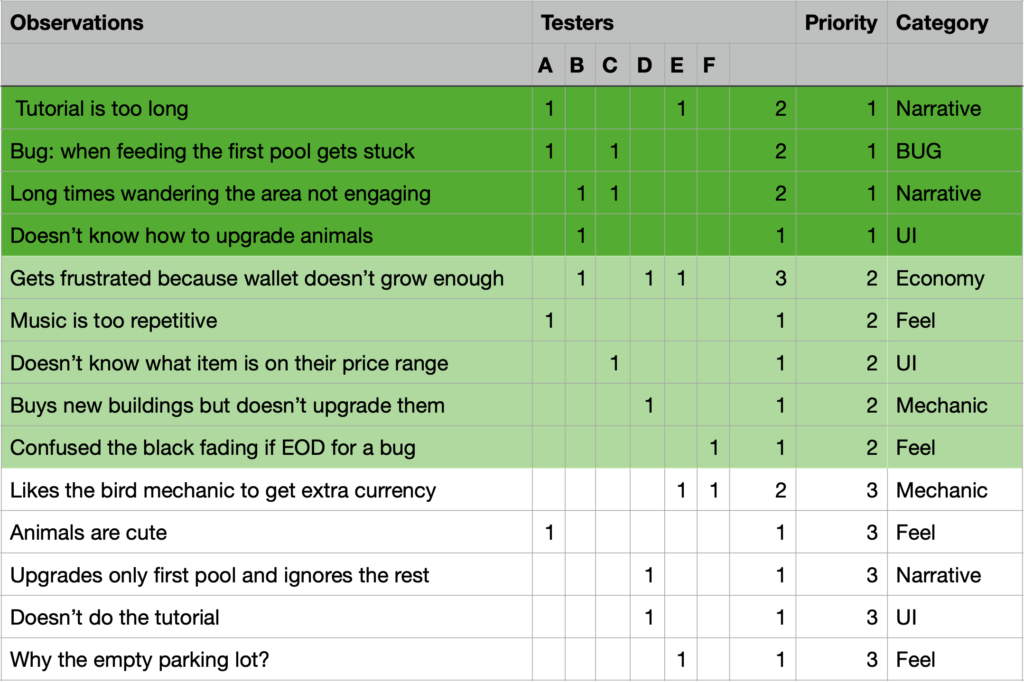

4. Insight Categorization

Once all observations were logged, I organized them into thematic buckets: 🧩 Mechanic, 💡 UI, 📦 Economy, 📖 Narrative, 🎮 General Feel and 🐞 Bugs. Bugs were flagged immediately and shared directly with the dev team for quick fixes.
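A minimal sketch of this bucketing step, assuming each logged note has already been tagged by hand with one category. The category names come from the list above; the function names and sample notes are hypothetical.

```python
from collections import Counter
from enum import Enum


class Category(Enum):
    MECHANIC = "Mechanic"
    UI = "UI"
    ECONOMY = "Economy"
    NARRATIVE = "Narrative"
    GENERAL_FEEL = "General Feel"
    BUGS = "Bugs"


def split_bugs(tagged):
    """Separate bug notes (sent straight to the dev team) from the rest."""
    bugs = [note for category, note in tagged if category is Category.BUGS]
    rest = [(category, note) for category, note in tagged
            if category is not Category.BUGS]
    return bugs, rest


def count_by_category(tagged):
    """Count how many notes fall into each bucket."""
    return Counter(category for category, _ in tagged)


# Invented sample notes for illustration only
tagged = [
    (Category.UI, "Too many buttons on screen"),
    (Category.ECONOMY, "Earning money feels slow"),
    (Category.BUGS, "Game froze after closing a popup"),
    (Category.UI, "Icon meaning unclear"),
]
bugs, insights = split_bugs(tagged)
counts = count_by_category(insights)
```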

5. Prioritization & Handoff

The product manager and I reviewed all issues and ranked them based on:

How many testers encountered them

The severity of the issue (e.g. breaking the flow vs minor confusion)

The impact on player retention or engagement

From there, we selected the five most important insights, and I wrote up concrete, actionable recommendations for the development studio: not just what was wrong, but how it could be improved in the next build.
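The ranking was done by discussion, not by formula. Still, the three criteria could be combined into a rough score, as in the sketch below. The 1 to 3 scales, the multiplication, and the sample issues are assumptions made for illustration; they do not describe how the real ranking was calculated.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    testers_affected: int  # how many testers encountered the issue
    severity: int          # assumed scale: 1 = minor confusion, 3 = breaks the flow
    retention_impact: int  # assumed scale: 1 = low, 3 = high


def priority_score(issue: Issue, total_testers: int) -> float:
    """Share of testers affected, weighted by severity and retention impact."""
    reach = issue.testers_affected / total_testers
    return reach * issue.severity * issue.retention_impact


def top_issues(issues, total_testers: int, limit: int = 5):
    """Return the highest-scoring issues, best first."""
    ranked = sorted(
        issues,
        key=lambda issue: priority_score(issue, total_testers),
        reverse=True,
    )
    return ranked[:limit]


# Invented sample issues for illustration only
issues = [
    Issue("Tutorial step unclear", testers_affected=6, severity=3, retention_impact=3),
    Issue("Button label typo", testers_affected=8, severity=1, retention_impact=1),
    Issue("Cutscene feels slow", testers_affected=2, severity=3, retention_impact=2),
]
shortlist = top_issues(issues, total_testers=8, limit=2)
```

A score like this is best treated as a starting point for the conversation, since severity and retention impact remain judgment calls.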

6. Iteration & Retesting

Once the studio had made changes, we repeated the process — this time focusing on:

Whether the key issues were resolved

Whether new issues emerged as a result of the changes

How players responded to updated flows and features

This second round validated several key fixes (like the revised tutorial and improved pacing), and showed that the feedback loop was working as intended.
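The three retest questions map onto a simple comparison between rounds. The sketch below assumes each issue has a stable label that is reused across rounds; the labels and the function name are hypothetical.

```python
def compare_rounds(round_one, round_two):
    """Classify issues as resolved, persisting, or new between two rounds."""
    first, second = set(round_one), set(round_two)
    return {
        "resolved": sorted(first - second),    # seen in round one only
        "persisting": sorted(first & second),  # seen in both rounds
        "new": sorted(second - first),         # seen in round two only
    }


# Invented issue labels for illustration only
result = compare_rounds(
    round_one=["tutorial-unclear", "slow-cutscene", "cluttered-hud"],
    round_two=["cluttered-hud", "reward-popup-confusing"],
)
```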

Example of a spreadsheet with observations already prioritized.

Idle Sea Park playtesting outcomes

The process led to immediate and tangible improvements in Idle Sea Park, including:

A revised tutorial that better supported early player understanding

A change in music, based on emotional tone mismatch feedback

Fixes for several bugs that hadn’t been flagged internally

A refined pace in non-skippable scenes, improving flow and reducing drop-off risk

Final Thoughts

This project demonstrated the value of embedding user feedback early in the development of hyper-casual games. By introducing a lean, repeatable playtesting process, we were able to surface real user pain points before release, improve gameplay quality, and create a structured communication channel between designers, stakeholders, and developers.

Even with limited resources, thoughtful testing led to better decisions and better games.